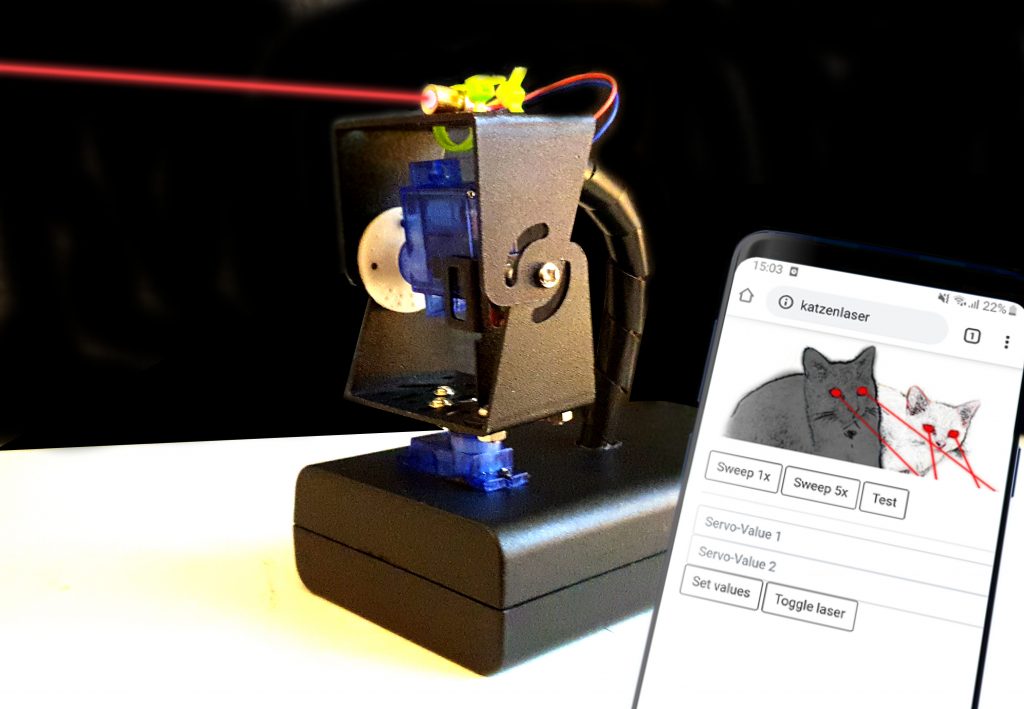

I finally am a full member of the internet – I am the proud servant of two cats! To keep them entertained when me and my wife are at work, I built a web-controlled laser-turret:

At its heart is an ESP2866 on an nodemcu amica v2, a cheap servo pan/tilt kit with small 9g servos and some no-brand laser-module rated for 5v and 40mA.

In this build-log I will write about the process of creating it.

The code and all files I used can be found in my github-repo: https://github.com/TobiasWeis/cats_and_lasers.

Neueste Kommentare