The Raspberry Pi will be the main processing unit of my pypibot. I want to power 6 V motors, so I decided to go with a 7.2 V battery pack. I had a 2600 mAh one lying around, which should be enough for testing the setup for now.

I originally planned to go the easy way and ordered a converter from 8-36 V to 5 V with a micro-USB connector already wired (from DROK). Without any other load this worked nicely, even though the input voltage was below the converter's 8 V minimum. But as soon as the motors were wired up, the voltage dropped too low for this thing to still output 5 V, and as a consequence the Pi went down.

So, here is the way to go if you want to power a Raspberry Pi robustly from a 7.2 V battery (or anything above that voltage):

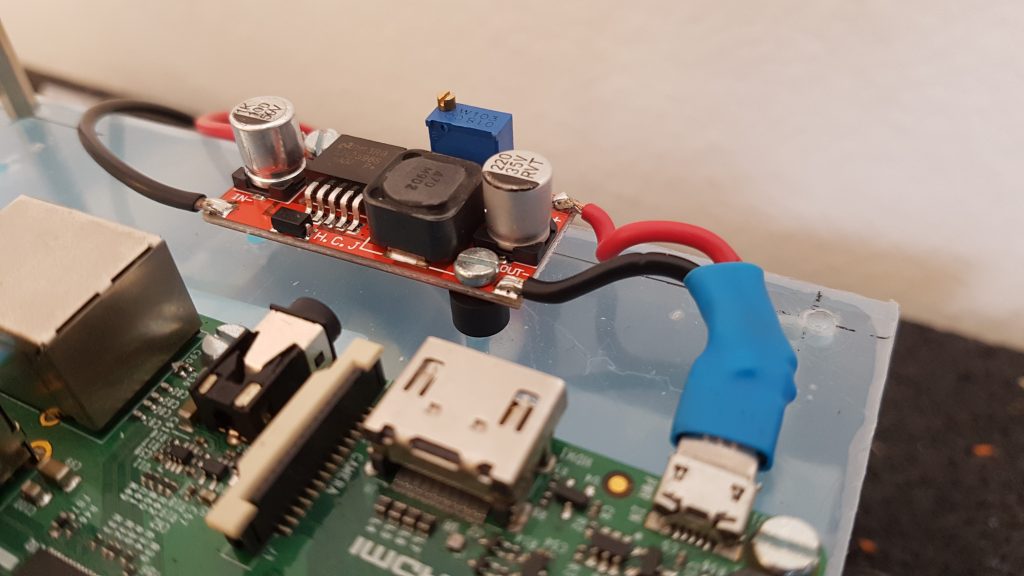

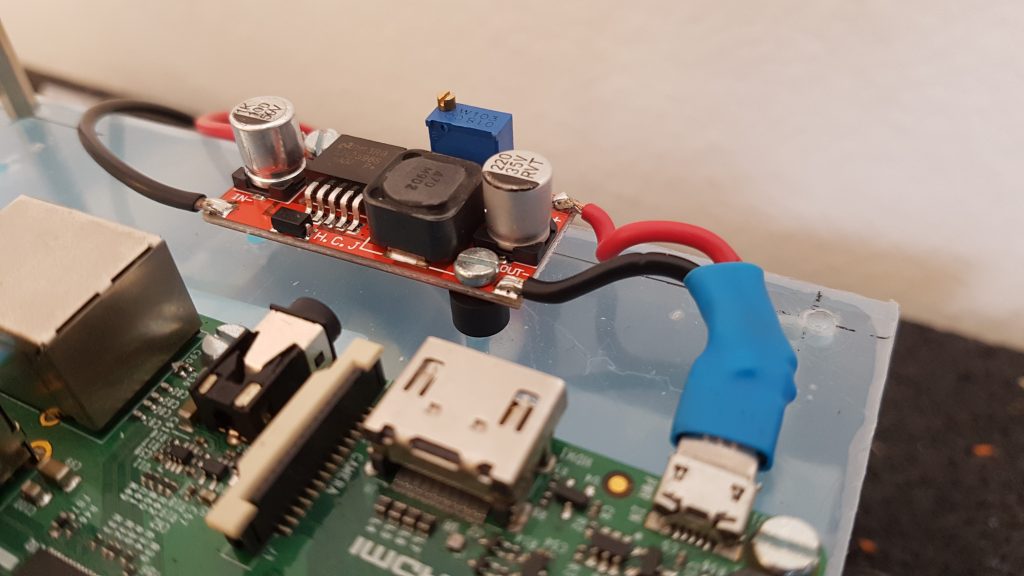

Using an adjustable DC/DC power converter! These units cost a little over 5 EUR for a pack of five, they take anything from 4 V to 35 V as input, and the output voltage can be configured by turning a little screw on a potentiometer. In my experiments, the input voltage could drop as low as 6.1 V, and the unit would still supply a rock-steady 5 V to the Pi. Once set up, it holds the output at 5 V over a wide range of input voltages. The units can handle 3 A max, which should be enough for the Raspberry Pi and any sensor I hook up to it.
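To get a feeling for what this means for the battery, here is a minimal sketch of the power balance of a step-down converter: the lower the input voltage, the more current it pulls from the pack to keep 5 V on the output. The 1 A load and the 85% efficiency are assumptions I picked for illustration, not measured values, and `input_current_a` is just a helper name made up for this post.

```python
def input_current_a(v_in, v_out=5.0, i_out=1.0, efficiency=0.85):
    """Estimate the current a step-down converter draws from the battery.

    v_out and i_out describe the load (the Pi); i_out=1.0 A and
    efficiency=0.85 are assumed example values, not measurements.
    """
    if v_in <= v_out:
        raise ValueError("a step-down converter needs v_in above v_out")
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    p_out = v_out * i_out               # power delivered to the Pi, in watts
    return p_out / (efficiency * v_in)  # the battery supplies p_out / efficiency


# Nominal pack voltage, and the lowest input voltage I observed
for v_in in (7.2, 6.1):
    print(f"{v_in:.1f} V in -> {input_current_a(v_in):.2f} A from the battery")
```

With these assumed numbers the battery current stays below 1 A in both cases, so the 3 A limit leaves plenty of room on the Pi side.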

I ended up soldering a micro-USB connector to it myself.

In a first test run with the 7.2 V, 2600 mAh NiCd battery pack I had lying around (it is quite old, so it probably has a far lower capacity than that), the Raspberry Pi lasted 1 hour and 42 minutes, while driving around with the motors from time to time: `up 1:42, load average: 0.48, 0.33, 0.33`.
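As a rough sanity check on that runtime, the sketch below turns capacity and runtime into an average current draw. It assumes the pack was drained completely. The 75% and 50% rows are hypothetical guesses at how much capacity an old pack might have left, not something I measured, and `average_draw_ma` is a made-up helper name.

```python
def average_draw_ma(capacity_mah, hours, minutes=0):
    """Average current in mA if capacity_mah was fully used over the runtime."""
    runtime_h = hours + minutes / 60
    if runtime_h <= 0:
        raise ValueError("runtime must be positive")
    return capacity_mah / runtime_h


RATED_MAH = 2600  # capacity printed on the pack

# 1.0 = rated capacity; 0.75 and 0.5 are hypothetical values for an aged pack
for remaining in (1.0, 0.75, 0.5):
    draw = average_draw_ma(RATED_MAH * remaining, hours=1, minutes=42)
    print(f"{remaining:.0%} of rated capacity -> about {draw:.0f} mA on average")
```

At the full rated capacity this works out to roughly 1.5 A for the Pi and motors together, which is an upper bound since the pack almost certainly holds less than that.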
